
아빠는 개발자

[Aqqle] Let's scrape Yahoo Finance


The concept of Aqqle seems to keep changing, but for now I'm going to scrape US stock information.

The prey this time is the page below.

It even has paging, so... you're done for.

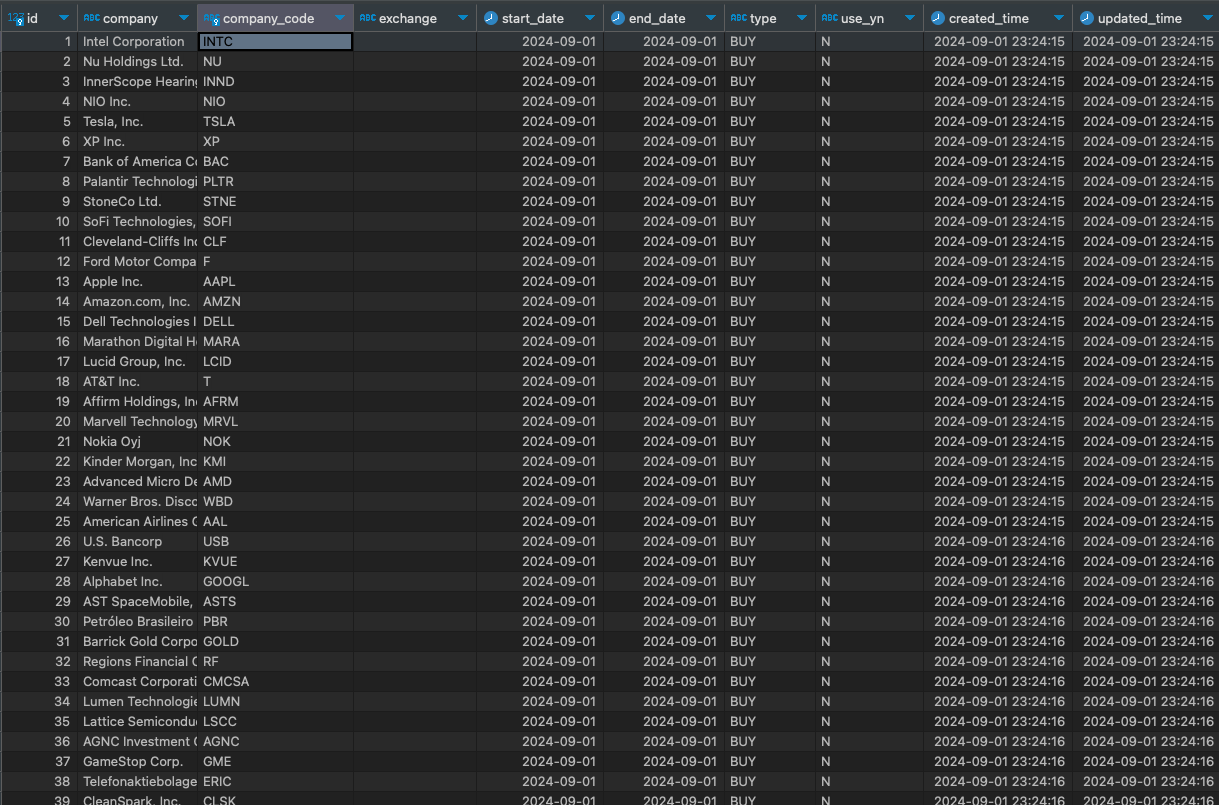

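The plan: scrape the leading code (the ticker symbol) and longName, put them in the DB, then use that code to download the price-history file and index it in a batch job.

First, the scrape.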

    package com.doo.aqqle.service;

    import com.doo.aqqle.element.Site;
    import com.doo.aqqle.factory.SiteFactory;
    import com.doo.aqqle.factory.YahooFactory;
    import com.doo.aqqle.repository.Stock;
    import com.doo.aqqle.repository.StockRepository;
    import io.vavr.Tuple;
    import io.vavr.Tuple2;
    import org.jsoup.Jsoup;
    import org.jsoup.nodes.Document;
    import org.jsoup.select.Elements;
    import org.springframework.stereotype.Service;

    import java.io.IOException;
    import java.time.LocalDateTime;
    import java.util.List;
    import java.util.stream.Collectors;

    @Service
    public class YahooService extends AqqleService implements AqqleCrawler {

        public YahooService(StockRepository stockRepository) {
            super(stockRepository);
        }

        // Number of rows requested per list page.
        private final int CRAWLING_COUNT = 25;

        @Override
        public void execute() {
            Site site = SiteFactory.getSite(new YahooFactory());

            for (var i = 1; i < 200; i++) {
                // Start offset passed to the list URL for this page.
                int start = i > 1 ? i * CRAWLING_COUNT : 1;
                String listUrl = site.getUrl(start, CRAWLING_COUNT);

                try {
                    Document listDocument = Jsoup.connect(listUrl)
                            .timeout(5000)
                            .get();

                    Elements tableTr = listDocument.select(site.getListCssSelector());

                    // One (symbol, longName) pair per table row.
                    List<Tuple2<String, String>> tableTrs = tableTr.stream()
                            .map(x -> Tuple.of(
                                    x.select("span.symbol").text(),
                                    x.select("span.longName").text()
                            ))
                            .collect(Collectors.toList());

                    tableTrs.forEach(tuple -> {
                        stockRepository.save(Stock.builder()
                                .company(tuple._2)
                                .companyCode(tuple._1)
                                .exchange("")
                                .startDate(LocalDateTime.now().toString())
                                .endDate(LocalDateTime.now().toString())
                                .type("BUY")
                                .use("N")
                                .build());

                        System.out.println("Symbol: " + tuple._1);
                        System.out.println("Long: " + tuple._2);
                    });
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }
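
The paging arithmetic in the loop is worth a closer look. `site.getUrl` is not shown here, so how it interprets `start` is an assumption on my part, but the sketch below prints the offsets produced by the expression as written next to a hypothetical contiguous, zero-based alternative. `PageOffsets`, `asWritten`, and `contiguous` are illustrative names, not part of Aqqle.

```java
import java.util.ArrayList;
import java.util.List;

public class PageOffsets {

    // Offsets produced by the same expression as the crawler loop: i > 1 ? i * pageSize : 1
    static List<Integer> asWritten(int pages, int pageSize) {
        List<Integer> offsets = new ArrayList<>();
        for (int i = 1; i <= pages; i++) {
            offsets.add(i > 1 ? i * pageSize : 1);
        }
        return offsets;
    }

    // Hypothetical alternative: contiguous zero-based offsets (0, pageSize, 2 * pageSize, ...)
    static List<Integer> contiguous(int pages, int pageSize) {
        List<Integer> offsets = new ArrayList<>();
        for (int i = 0; i < pages; i++) {
            offsets.add(i * pageSize);
        }
        return offsets;
    }

    public static void main(String[] args) {
        System.out.println(asWritten(4, 25));  // [1, 50, 75, 100]
        System.out.println(contiguous(4, 25)); // [0, 25, 50, 75]
    }
}
```

If `start` really is a row offset, the expression as written jumps from 1 straight to 50, so some rows would never be requested. Whether that matters depends on how the site handles the parameter, which I have not verified.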

Execution result

Symbol: INTC

Long: Intel Corporation

Symbol: NU

Long: Nu Holdings Ltd.

Symbol: INND

Long: InnerScope Hearing Technologies, Inc.

Symbol: NIO

Long: NIO Inc.

Symbol: TSLA

Long: Tesla, Inc.

Symbol: XP

Long: XP Inc.

Symbol: BAC

Long: Bank of America Corporation

Symbol: PLTR

Long: Palantir Technologies Inc.

Symbol: STNE

Long: StoneCo Ltd.

Symbol: SOFI

Long: SoFi Technologies, Inc.

The stolen goods

Loot no. 1.

I only managed to steal 189 rows before a duplicate-key error on company_code was raised. It looks like this URL returns duplicate data.

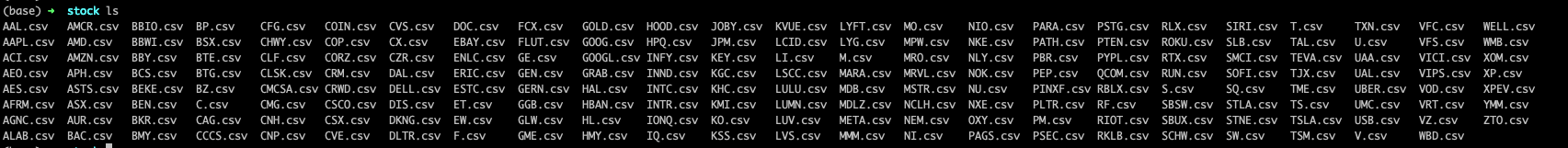
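
One way to sidestep duplicates inside a single run is to collapse the scraped rows by symbol before saving. This is only a sketch: `Row` is a hypothetical stand-in for the (symbol, longName) tuple, and it does nothing about rows that are already in the table from an earlier run.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class DedupeSymbols {

    // Hypothetical stand-in for one scraped row.
    record Row(String symbol, String longName) {}

    // Keeps the first row seen for each symbol and preserves scrape order.
    static List<Row> dedupe(List<Row> rows) {
        Map<String, Row> bySymbol = new LinkedHashMap<>();
        for (Row row : rows) {
            bySymbol.putIfAbsent(row.symbol(), row);
        }
        return new ArrayList<>(bySymbol.values());
    }

    public static void main(String[] args) {
        List<Row> scraped = List.of(
                new Row("INTC", "Intel Corporation"),
                new Row("TSLA", "Tesla, Inc."),
                new Row("INTC", "Intel Corporation"));
        System.out.println(dedupe(scraped).size()); // 2
    }
}
```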

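I ran the file downloader I built a while ago...

It does run.

Since the key is duplicated, I'll change the insert to an upsert and handle the file extraction in the extract part.

The crawler's job ends at scraping and storing.

There is a way to do this with EntityManager, but...

I'll use the easier-to-handle @Query annotation (the annotation used to define a JPQL (Java Persistence Query Language) query or a native SQL query).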

    package com.doo.aqqle.repository;

    import org.springframework.data.jpa.repository.JpaRepository;
    import org.springframework.data.jpa.repository.Modifying;
    import org.springframework.data.jpa.repository.Query;
    import org.springframework.data.repository.query.Param;
    import org.springframework.transaction.annotation.Transactional;

    import java.util.List;

    public interface StockRepository extends JpaRepository<Stock, Long> {

        List<Stock> findAllByUseYn(String use);

        // MySQL upsert: inserts a new row, or updates the existing row when the
        // insert would violate a unique key (here, the one on company_code).
        @Transactional
        @Modifying
        @Query(value = "INSERT INTO stock (company, company_code, exchange, start_date, end_date, `type`, use_yn) " +
                "VALUES (:company, :companyCode, :exchange, :startDate, :endDate, :type, :useYn) " +
                "ON DUPLICATE KEY UPDATE " +
                "company = :company, " +
                "exchange = :exchange, " +
                "start_date = :startDate, " +
                "end_date = :endDate, " +
                "`type` = :type, " +
                "use_yn = :useYn", nativeQuery = true)
        void upsert(
                @Param("company") String company,
                @Param("companyCode") String companyCode,
                @Param("exchange") String exchange,
                @Param("startDate") String startDate,
                @Param("endDate") String endDate,
                @Param("type") String type,
                @Param("useYn") String useYn
        );
    }
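
To make the upsert semantics concrete, here is an in-memory analogy: a map keyed by company_code plays the role of the table and its unique key. It is a sketch of the behaviour only, not of how MySQL or Spring Data implements it, and `UpsertSketch`, `companyOf`, and `size` are made-up names.

```java
import java.util.HashMap;
import java.util.Map;

public class UpsertSketch {

    // In-memory stand-in for the stock table, keyed by company_code.
    private final Map<String, String> companyByCode = new HashMap<>();

    // Returns true when a new row was inserted, false when an existing row was overwritten.
    boolean upsert(String companyCode, String company) {
        return companyByCode.put(companyCode, company) == null;
    }

    String companyOf(String companyCode) {
        return companyByCode.get(companyCode);
    }

    int size() {
        return companyByCode.size();
    }

    public static void main(String[] args) {
        UpsertSketch table = new UpsertSketch();
        System.out.println(table.upsert("TSLA", "Tesla"));       // true  (insert)
        System.out.println(table.upsert("TSLA", "Tesla, Inc.")); // false (update)
        System.out.println(table.size());                        // 1
        System.out.println(table.companyOf("TSLA"));             // Tesla, Inc.
    }
}
```

The second call does not fail on the duplicate key. It replaces the stored values, which is the behaviour the crawler needs when the list page repeats a symbol.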

The revised Service code is as follows.

    package com.doo.aqqle.service;

    import com.doo.aqqle.element.Site;
    import com.doo.aqqle.factory.SiteFactory;
    import com.doo.aqqle.factory.YahooFactory;
    import com.doo.aqqle.repository.Stock;
    import com.doo.aqqle.repository.StockRepository;
    import io.vavr.Tuple;
    import io.vavr.Tuple2;
    import lombok.extern.slf4j.Slf4j;
    import org.jsoup.Jsoup;
    import org.jsoup.nodes.Document;
    import org.jsoup.select.Elements;
    import org.springframework.stereotype.Service;

    import java.io.IOException;
    import java.time.LocalDateTime;
    import java.time.Period;
    import java.util.List;
    import java.util.stream.Collectors;

    @Slf4j
    @Service("YahooService")
    public class YahooService extends AqqleService implements AqqleCrawler {

        public YahooService(StockRepository stockRepository) {
            super(stockRepository);
        }

        // Number of rows requested per list page.
        private final int CRAWLING_COUNT = 25;

        @Override
        public void execute() {
            Site site = SiteFactory.getSite(new YahooFactory());

            for (var i = 1; i < 200; i++) {
                // Start offset passed to the list URL for this page.
                int start = i > 1 ? i * CRAWLING_COUNT : 1;
                String listUrl = site.getUrl(start, CRAWLING_COUNT);

                try {
                    Document listDocument = Jsoup.connect(listUrl)
                            .timeout(5000)
                            .get();

                    Elements tableTr = listDocument.select(site.getListCssSelector());

                    // One (symbol, longName) pair per table row.
                    List<Tuple2<String, String>> tableTrs = tableTr.stream()
                            .map(x -> Tuple.of(
                                    x.select("span.symbol").text(),
                                    x.select("span.longName").text()
                            ))
                            .collect(Collectors.toList());

                    tableTrs.forEach(tuple -> {
                        // The history window now covers the last six months.
                        Stock stock = Stock.builder()
                                .company(tuple._2)
                                .companyCode(tuple._1)
                                .exchange("")
                                .startDate(LocalDateTime.now().minus(Period.ofMonths(6)).toString())
                                .endDate(LocalDateTime.now().toString())
                                .type("BUY")
                                .use("Y")
                                .build();

                        // Upsert instead of save, so a repeated company_code updates the row.
                        stockRepository.upsert(
                                stock.getCompany(),
                                stock.getCompanyCode(),
                                stock.getExchange(),
                                stock.getStartDate(),
                                stock.getEndDate(),
                                stock.getType(),
                                stock.getUseYn()
                        );
                    });
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }

            log.info("Data saved.");
        }
    }
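
The revised service stores the date window as strings, so it helps to see what `LocalDateTime.toString()` produces. The sketch below uses an arbitrary fixed timestamp instead of `LocalDateTime.now()` to keep the output predictable; `DateWindow` and `startOf` are illustrative names.

```java
import java.time.LocalDateTime;
import java.time.Period;

public class DateWindow {

    // Start of the window: six months before the given end, as an ISO-8601 string.
    static String startOf(LocalDateTime end) {
        return end.minus(Period.ofMonths(6)).toString();
    }

    public static void main(String[] args) {
        // Arbitrary fixed timestamp, chosen only for illustration.
        LocalDateTime end = LocalDateTime.of(2024, 9, 8, 10, 30);
        System.out.println(startOf(end));   // 2024-03-08T10:30
        System.out.println(end.toString()); // 2024-09-08T10:30
    }
}
```

With `LocalDateTime.now()` the string usually also carries seconds and fractional seconds, so whatever consumes start_date and end_date downstream has to parse the ISO-8601 local date-time form.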
